Press Kit

Summary

Price: $4.99 (no in-app purchases or subscriptions)

Platforms: iOS 16+, iPadOS 16+, macOS 13+, visionOS 1.0+

Version: 3.0

Languages: English, Spanish

Privacy Label: No Data Collected

Age Rating: 17+

Size: 520 MB

App Store: Download

Press Access

To request a free review copy of the app, please get in touch.

Features

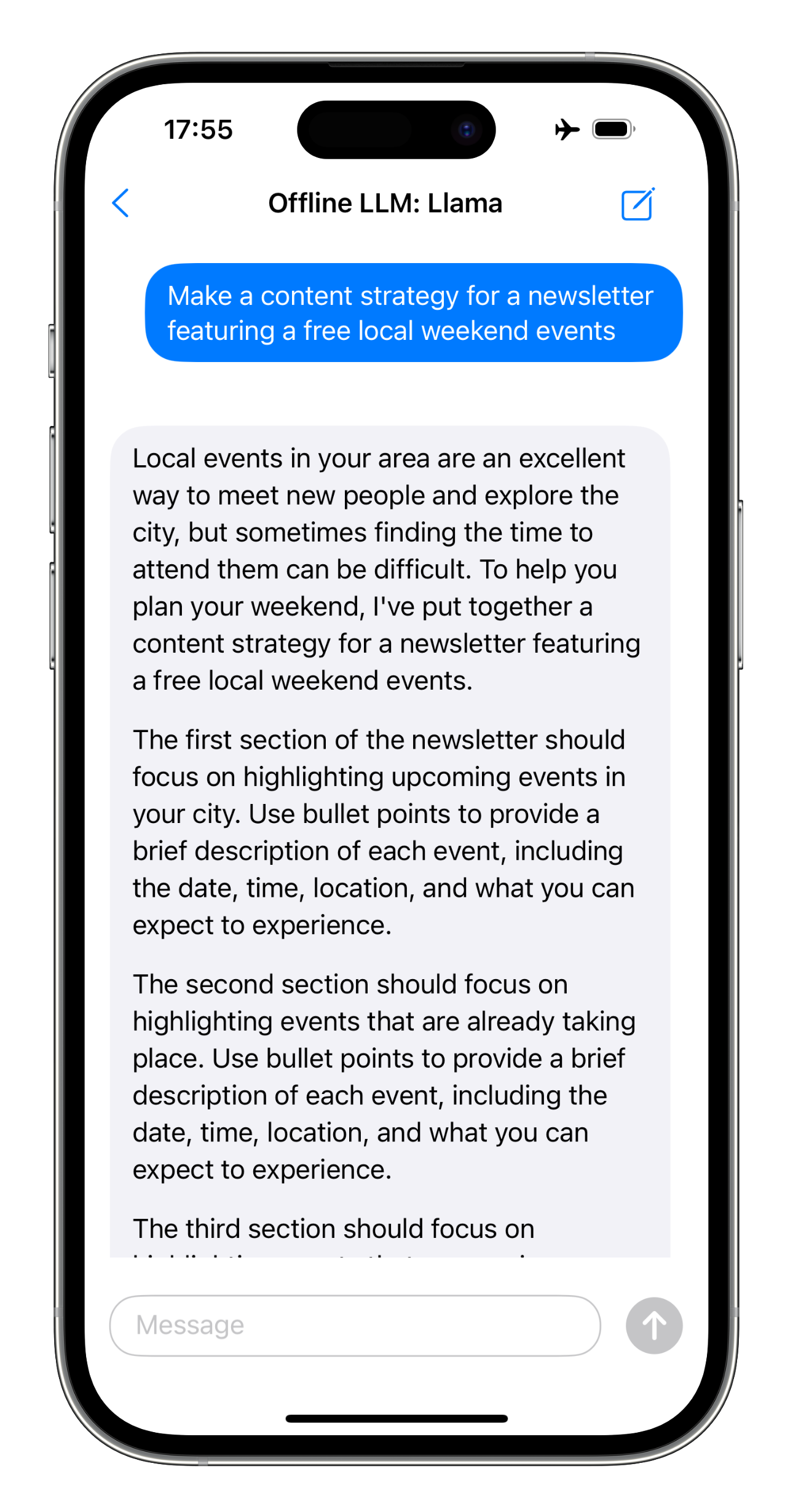

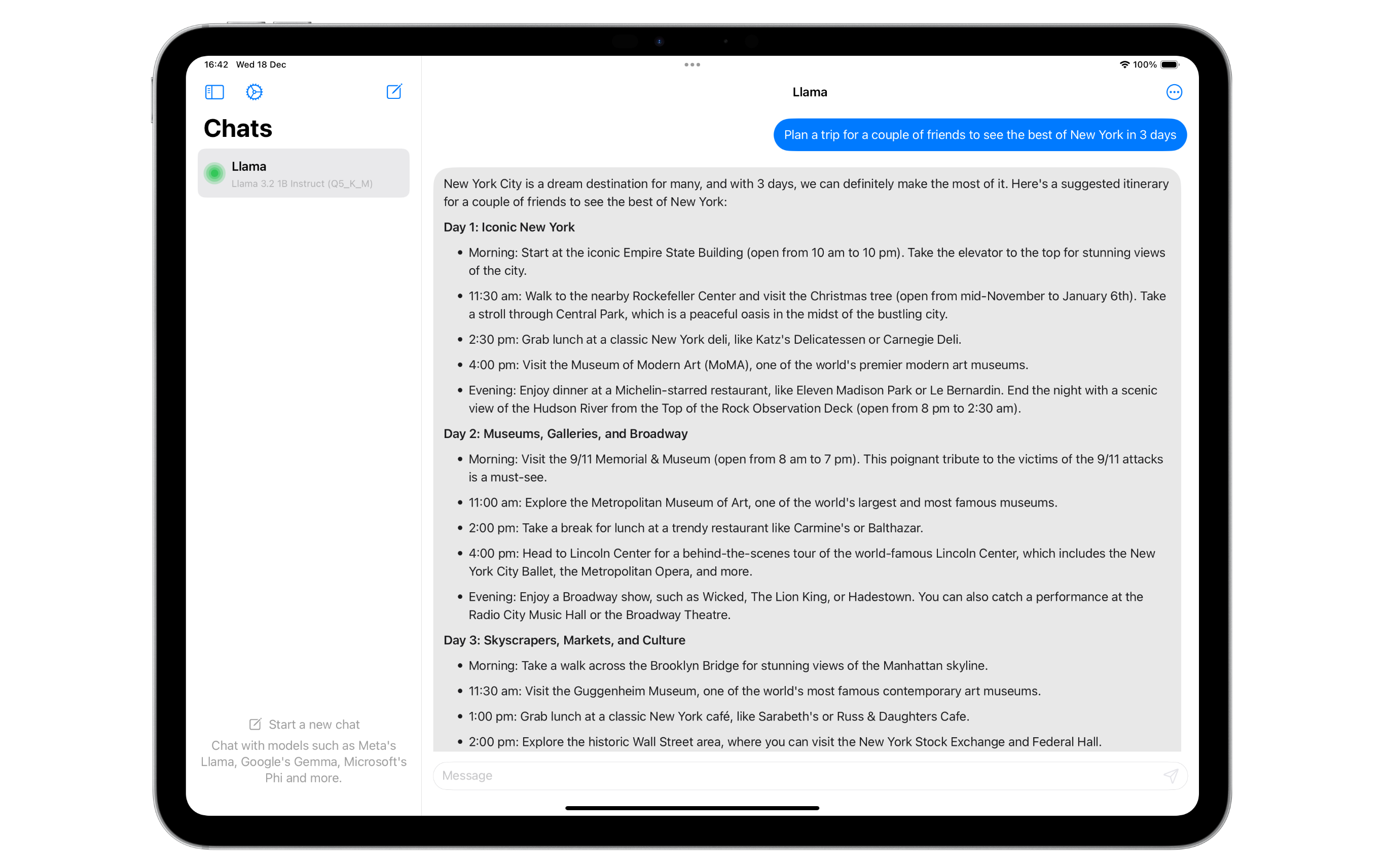

- Run LLMs on-device on iPhone, iPad, Mac and Apple Vision Pro

- The fastest on-device LLM execution engine for Apple Silicon (faster than llama.cpp and MLC)

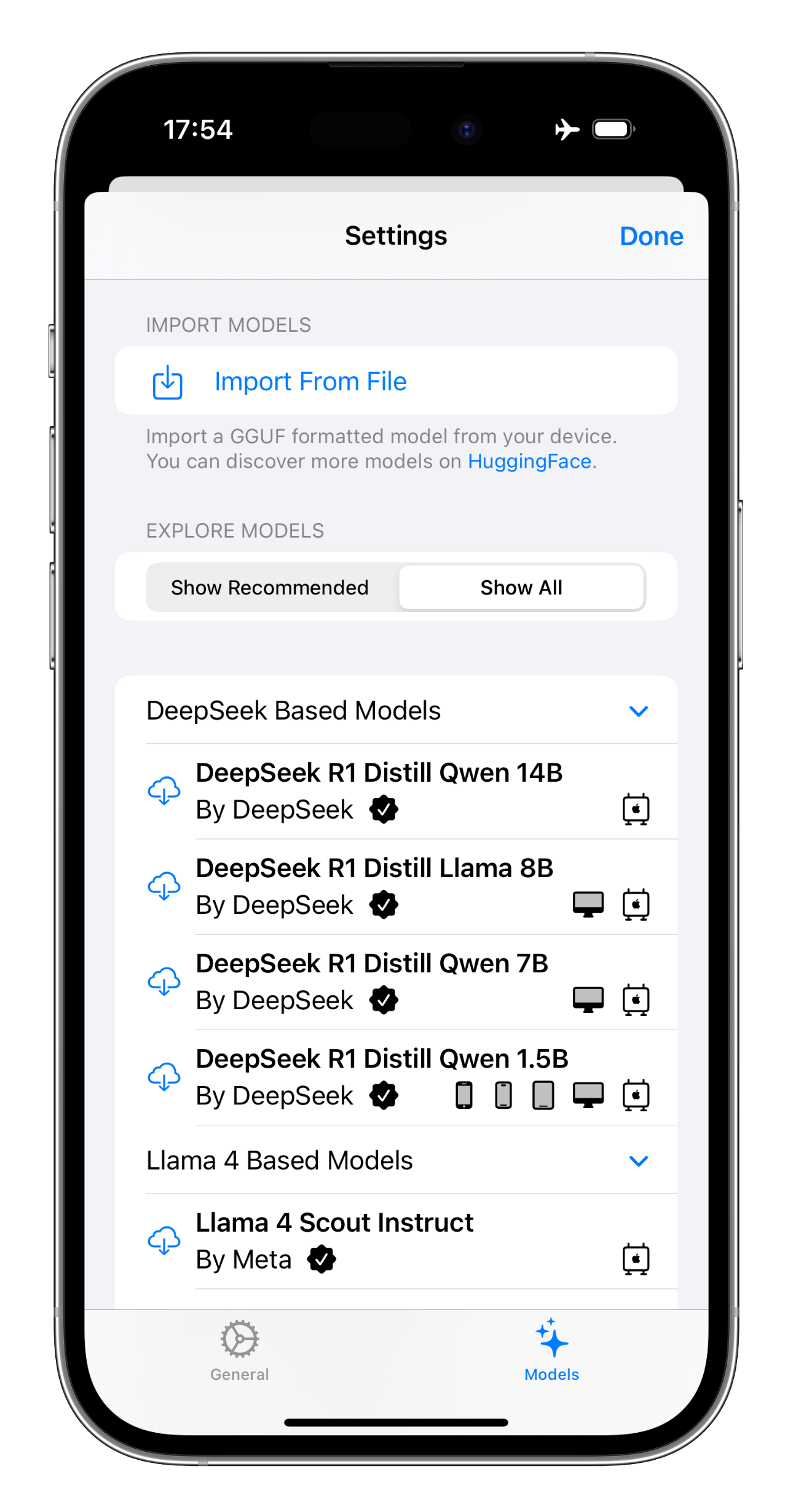

- Install any third-party LLM including DeepSeek, Llama, Qwen, Gemma, Phi, Mistral & more

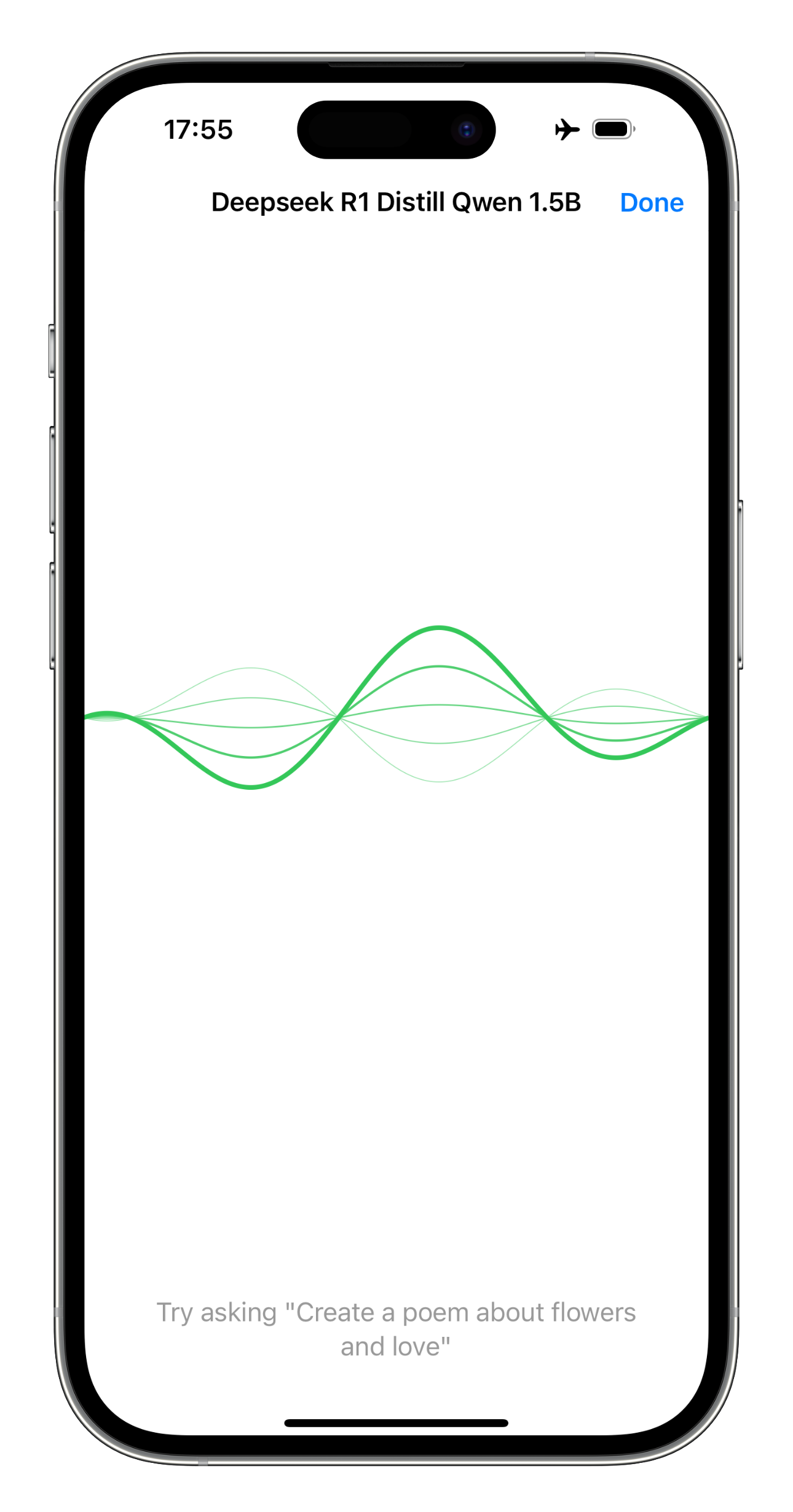

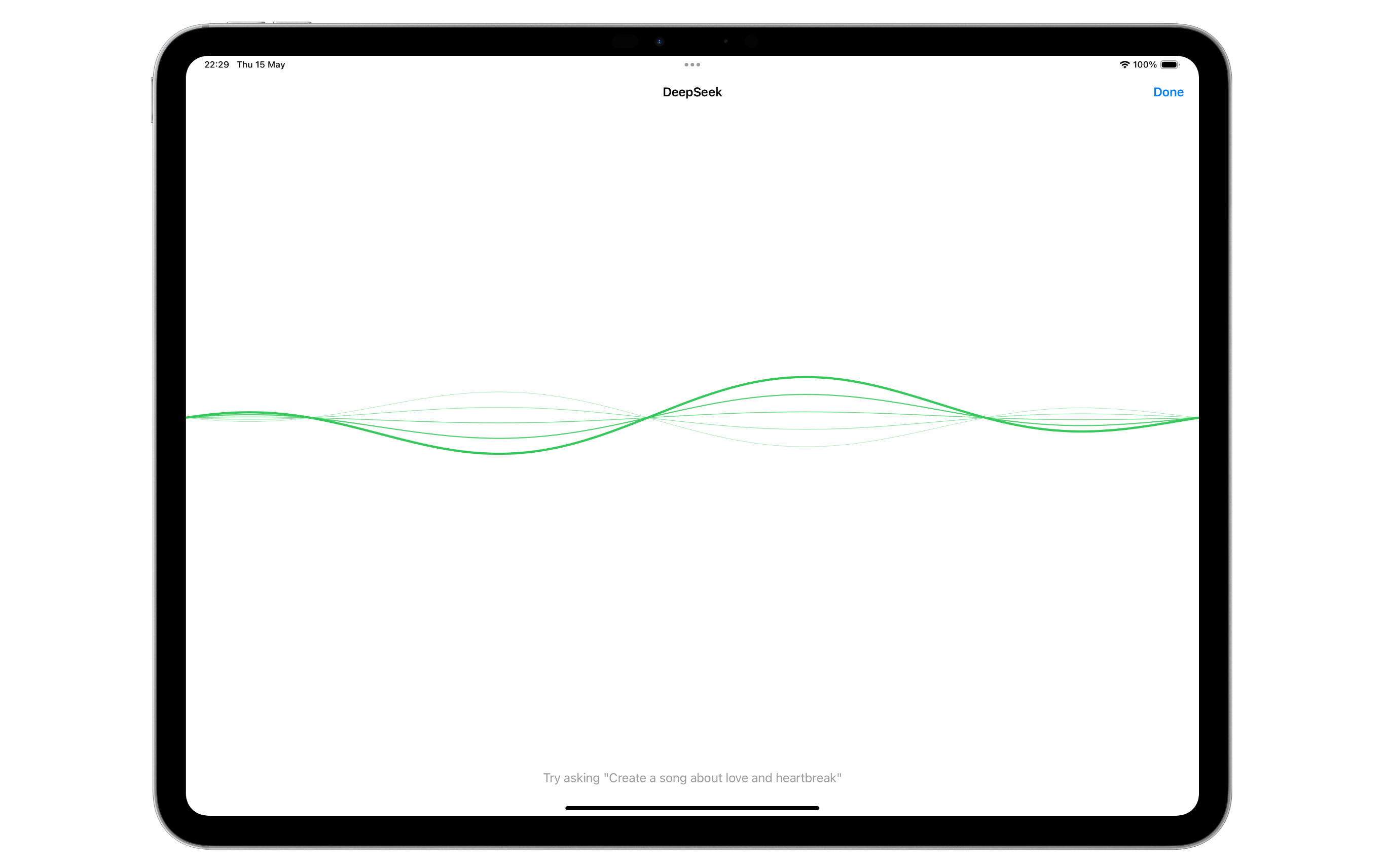

- Live Voice Chat (two-way voice conversations)

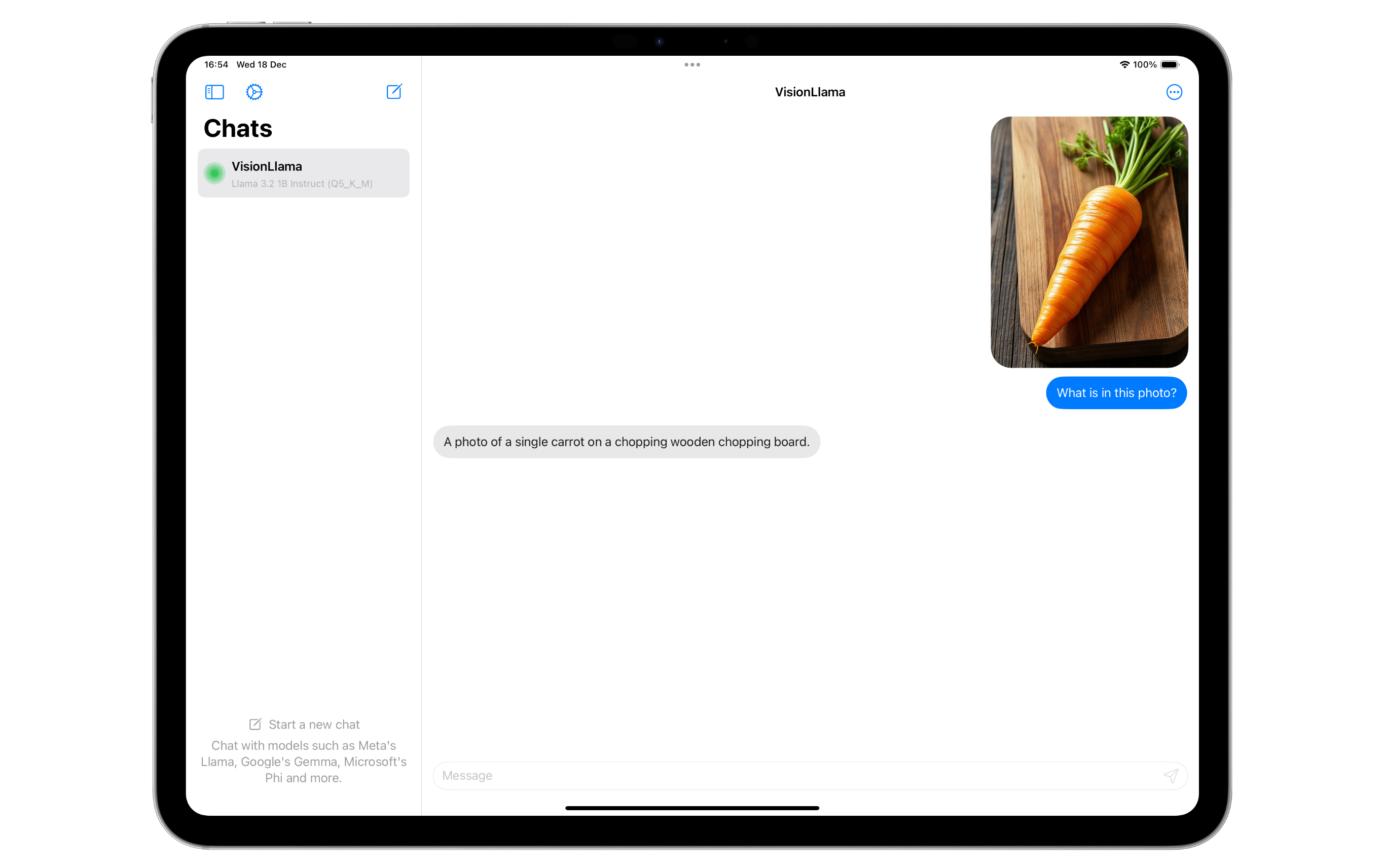

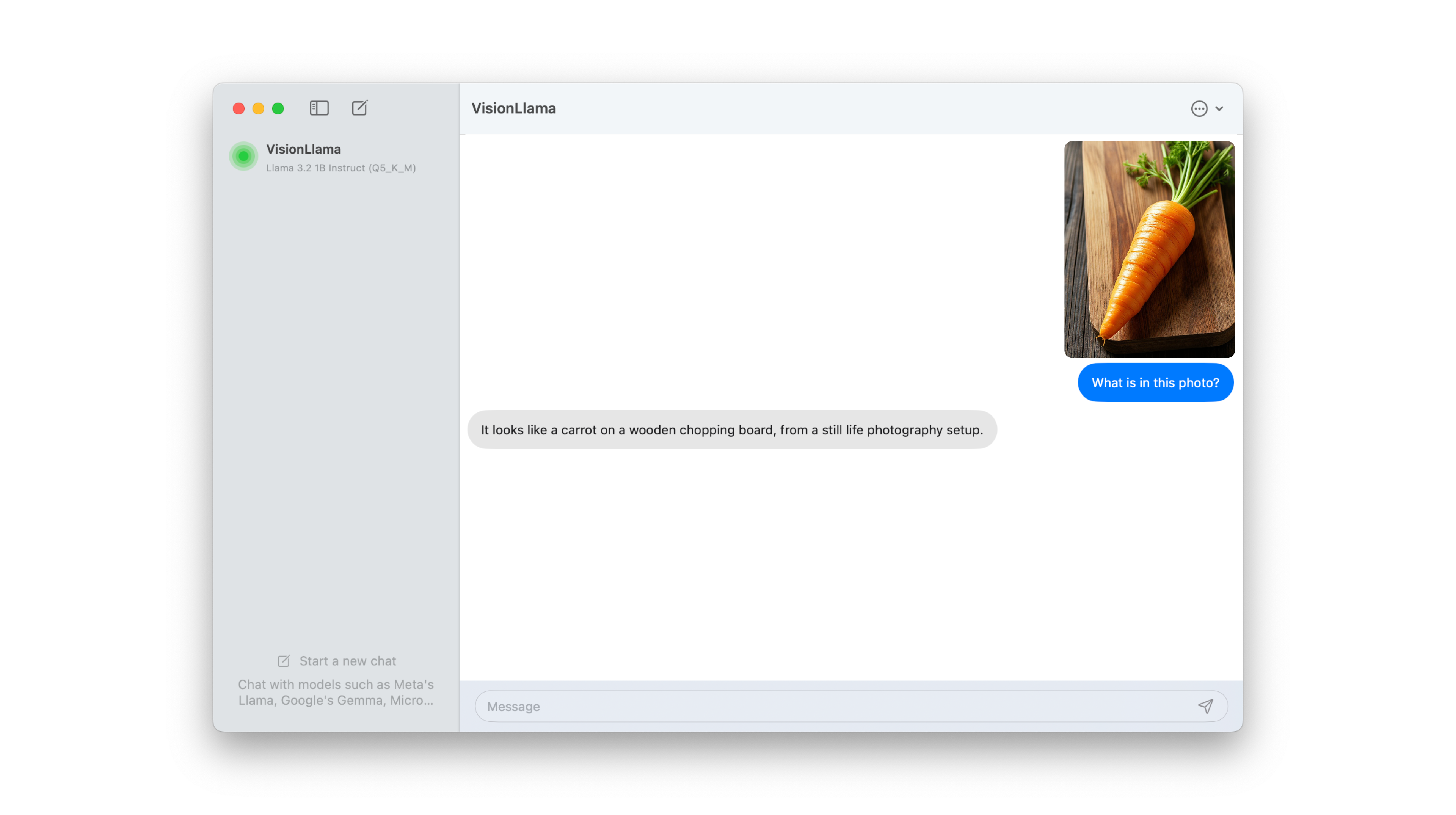

- Multimodal support (e.g. vision models)

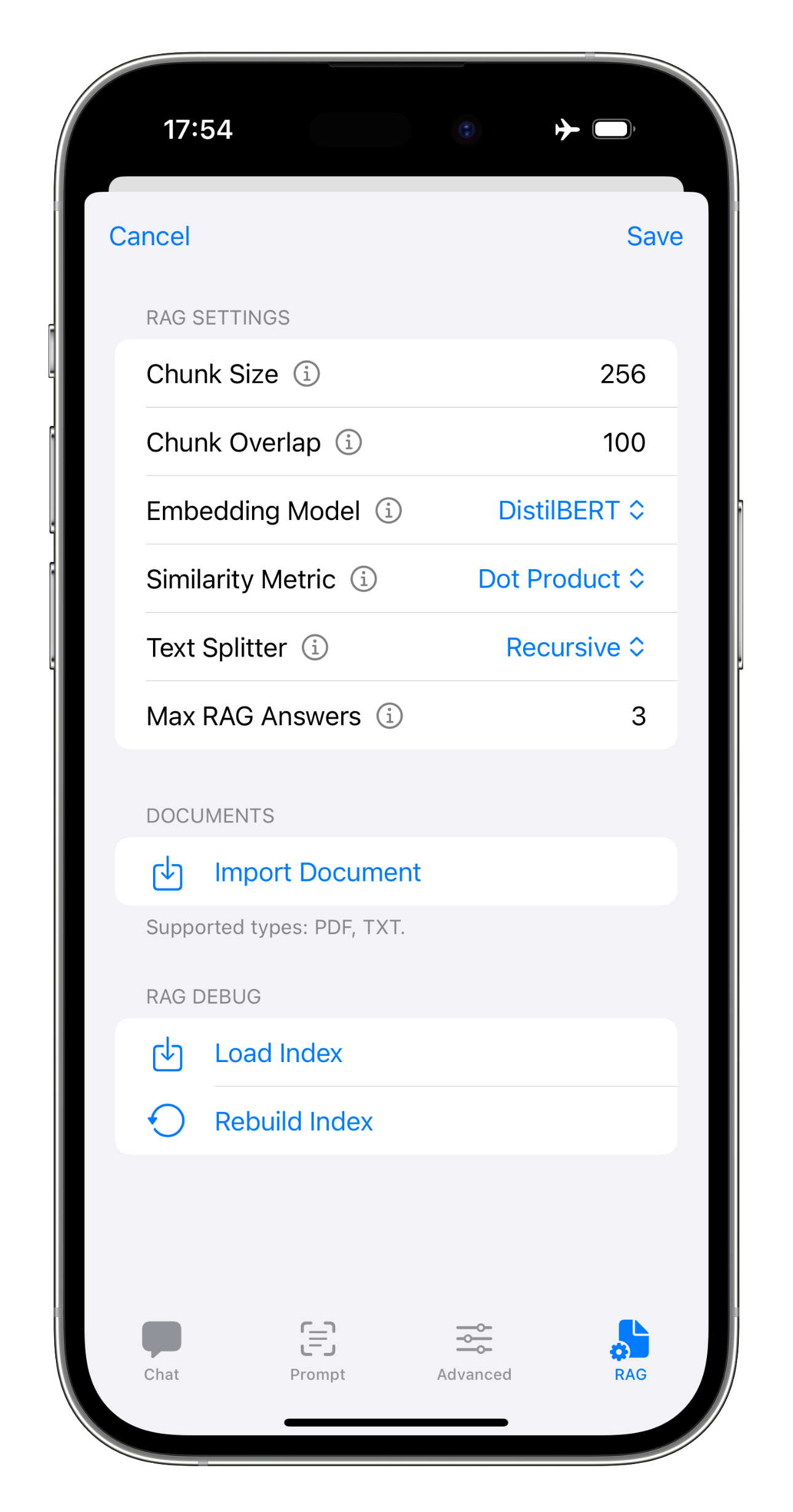

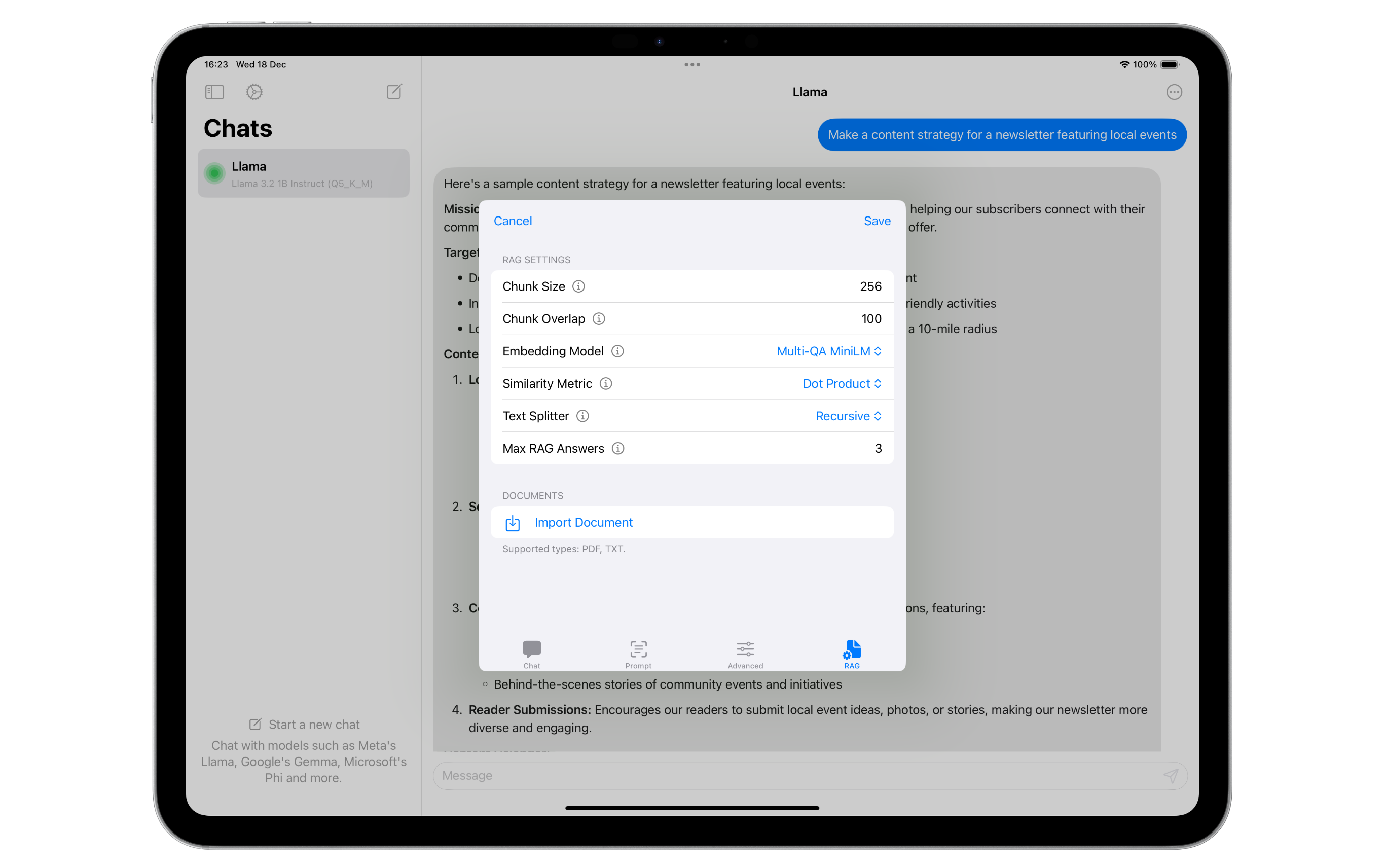

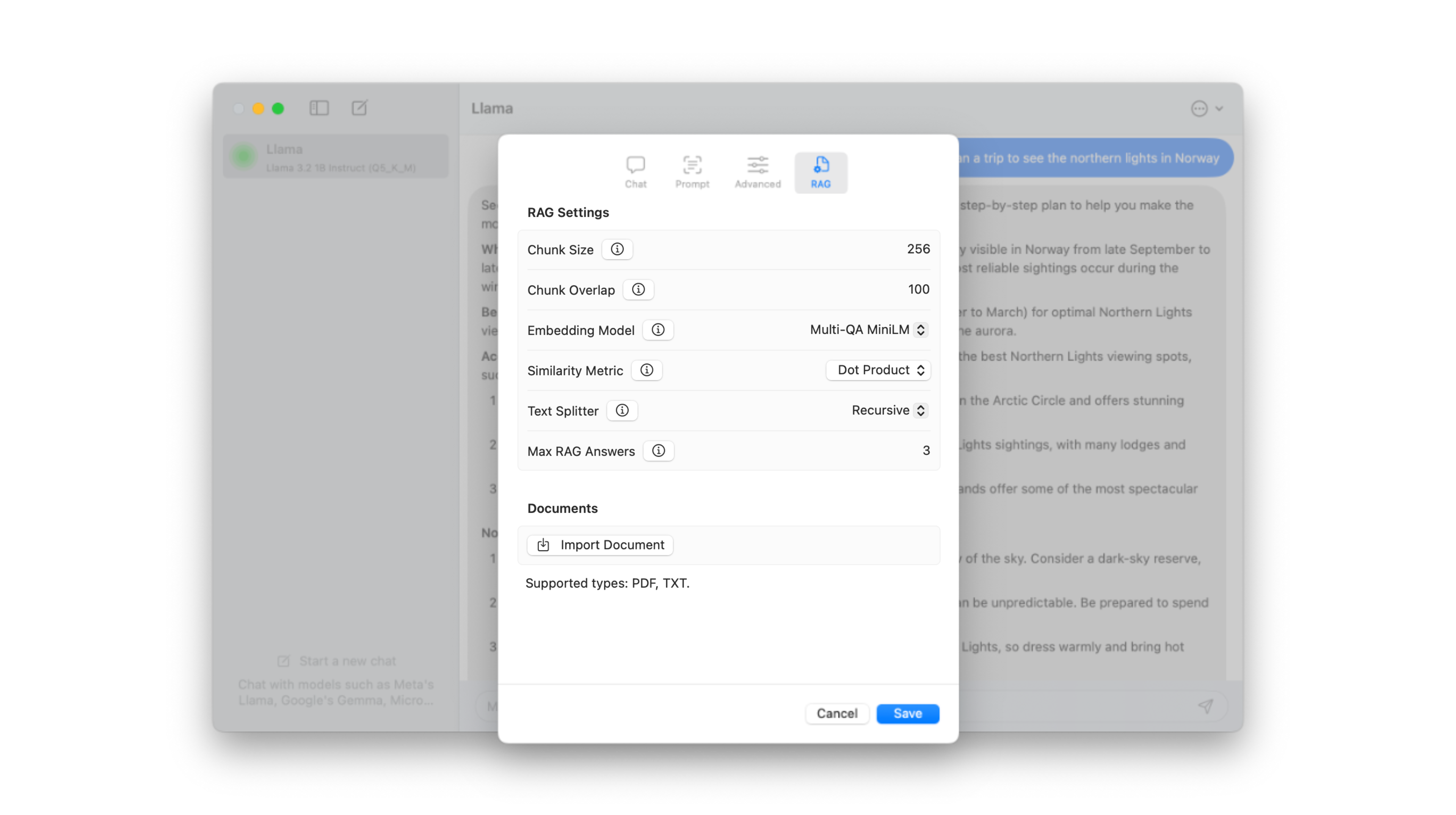

- Retrieval-Augmented Generation (RAG) support

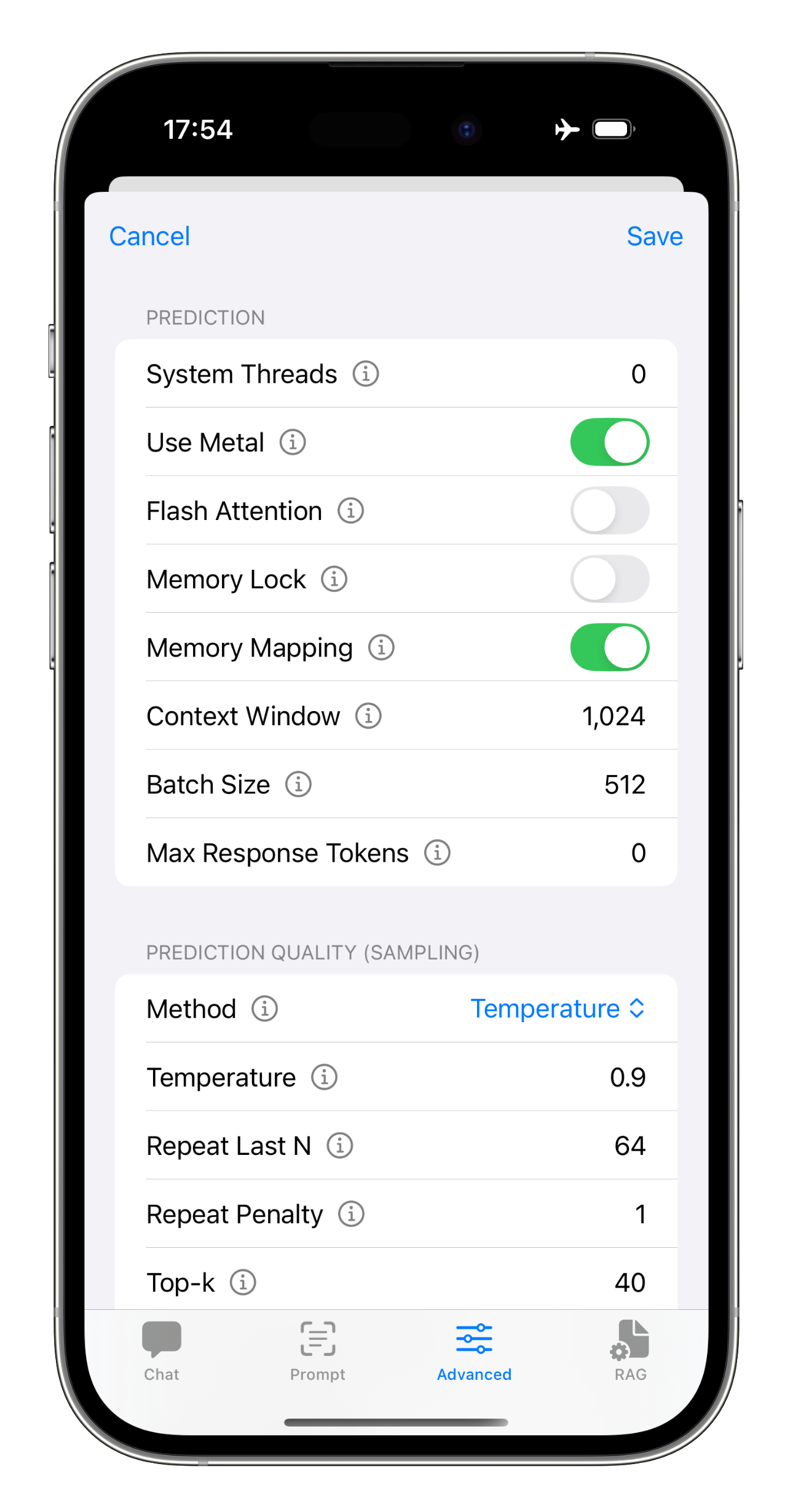

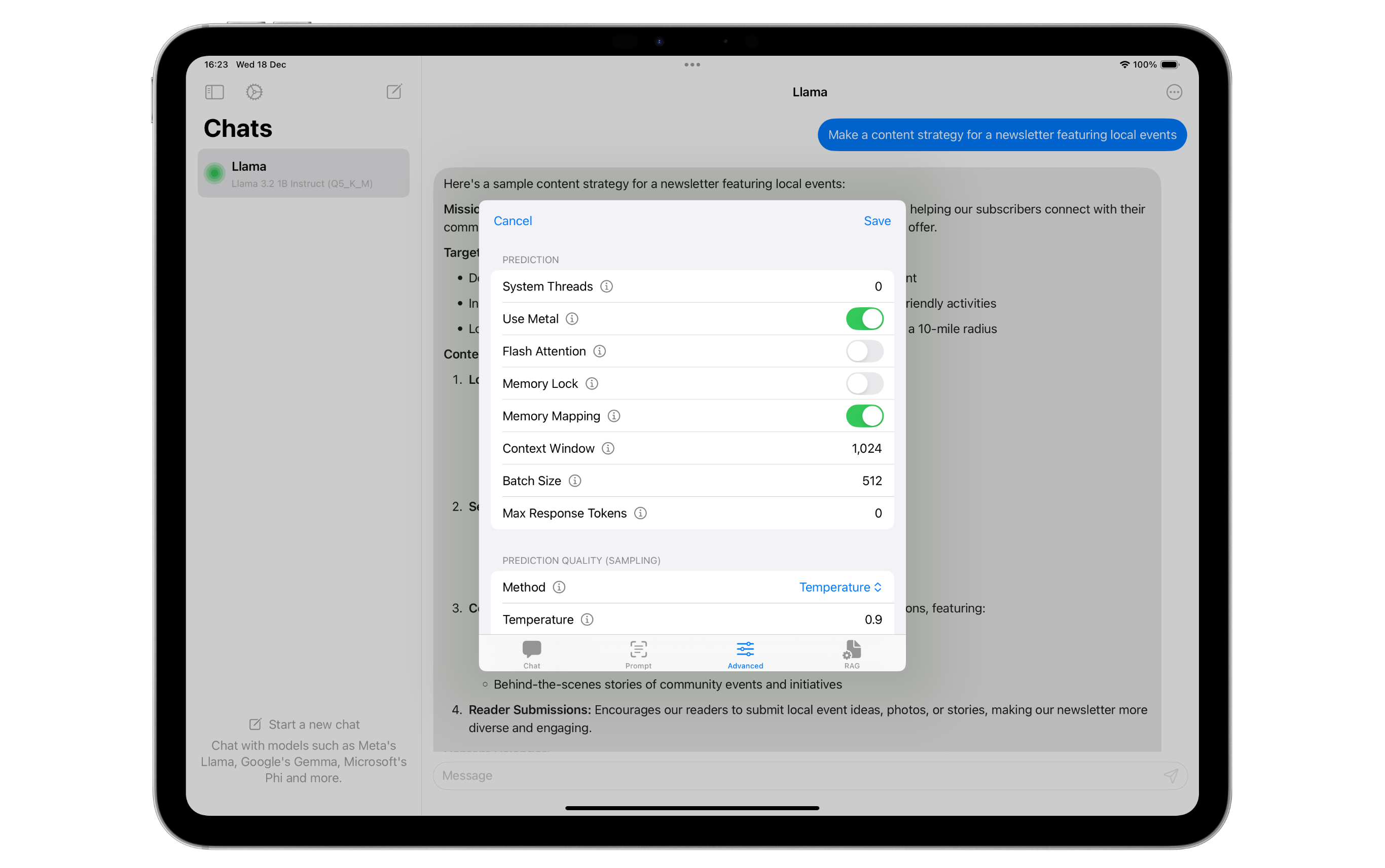

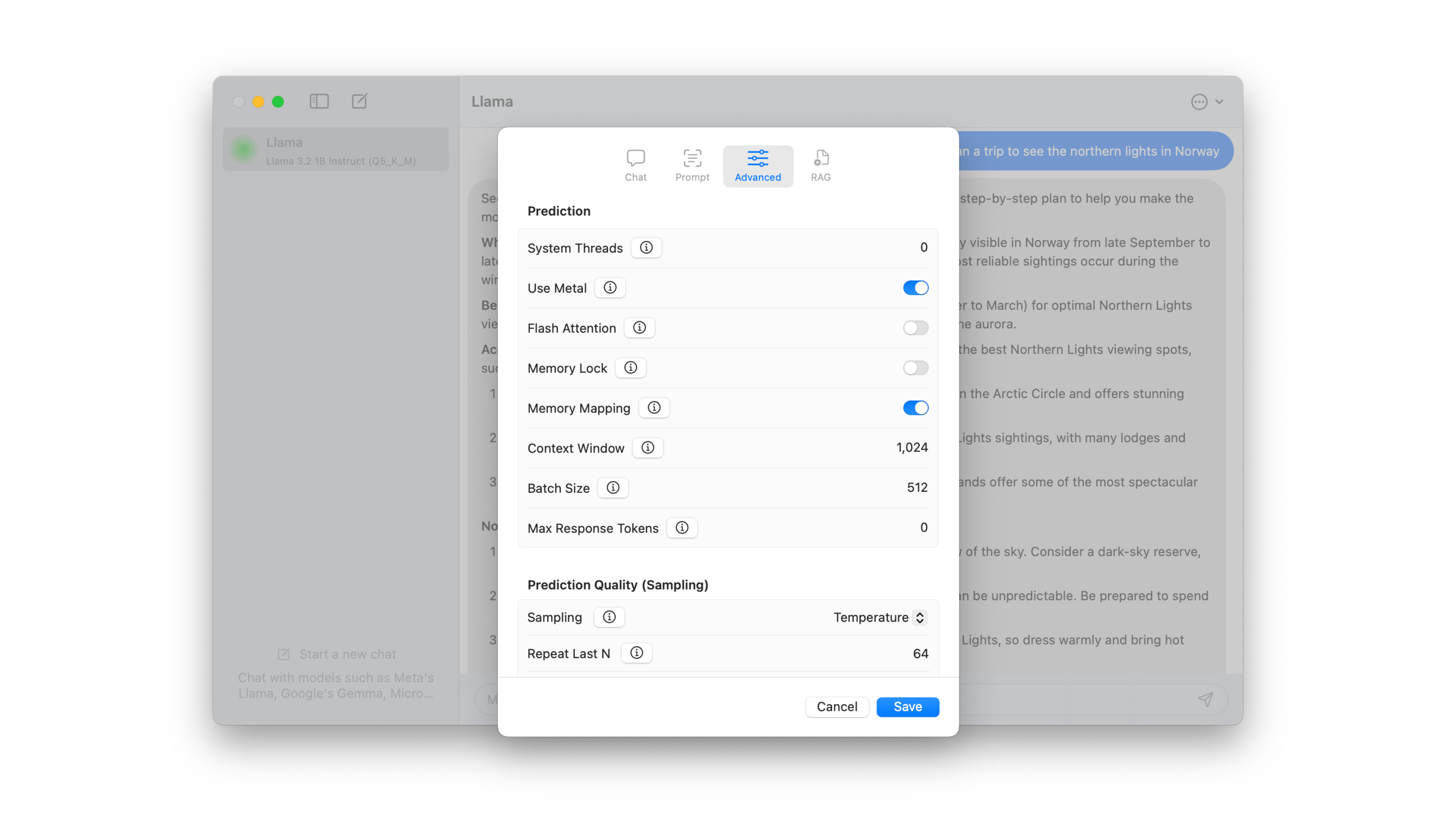

- Tweak the LLM's execution parameters

- Control the system prompt

- Beginner and Advanced modes

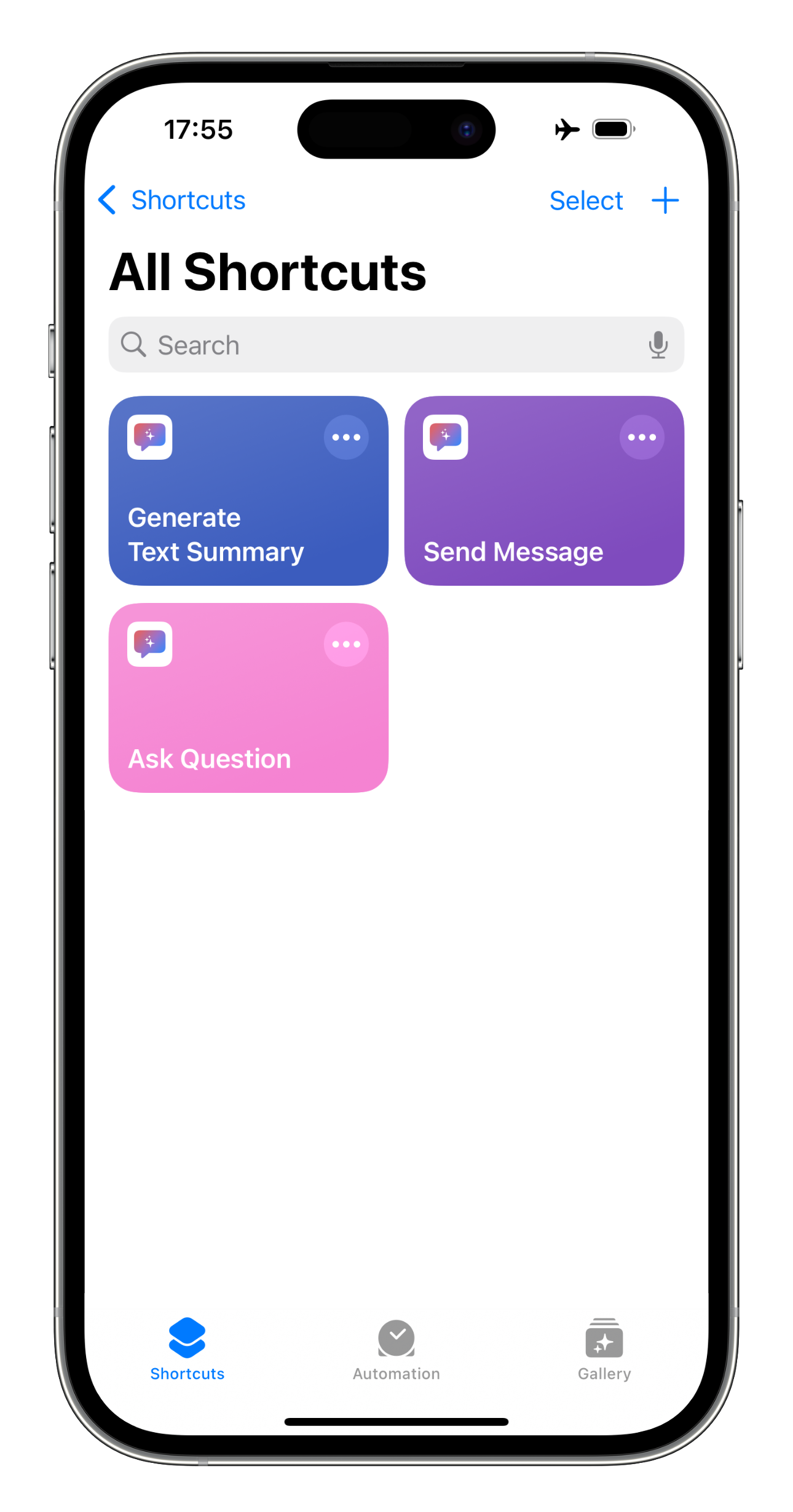

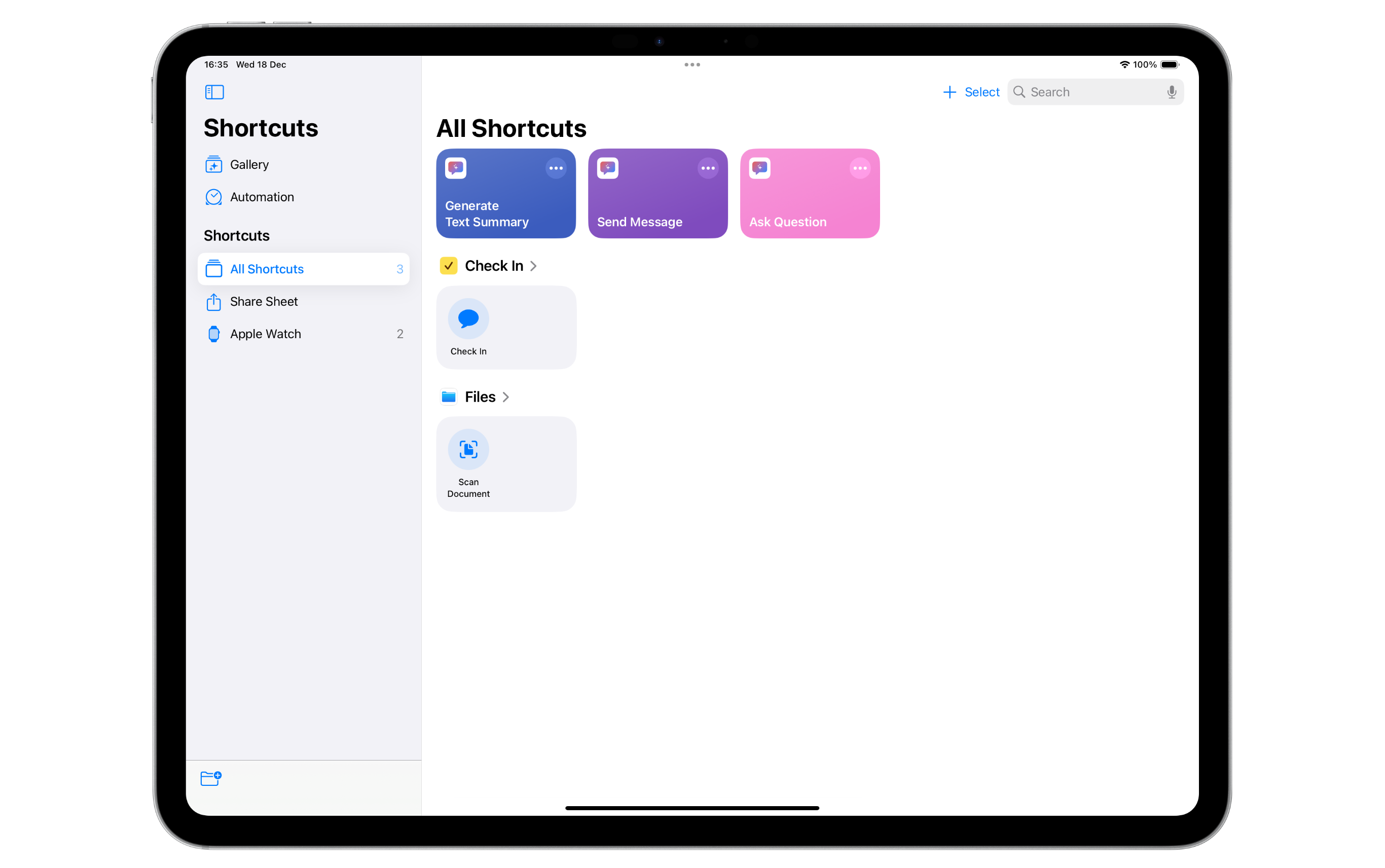

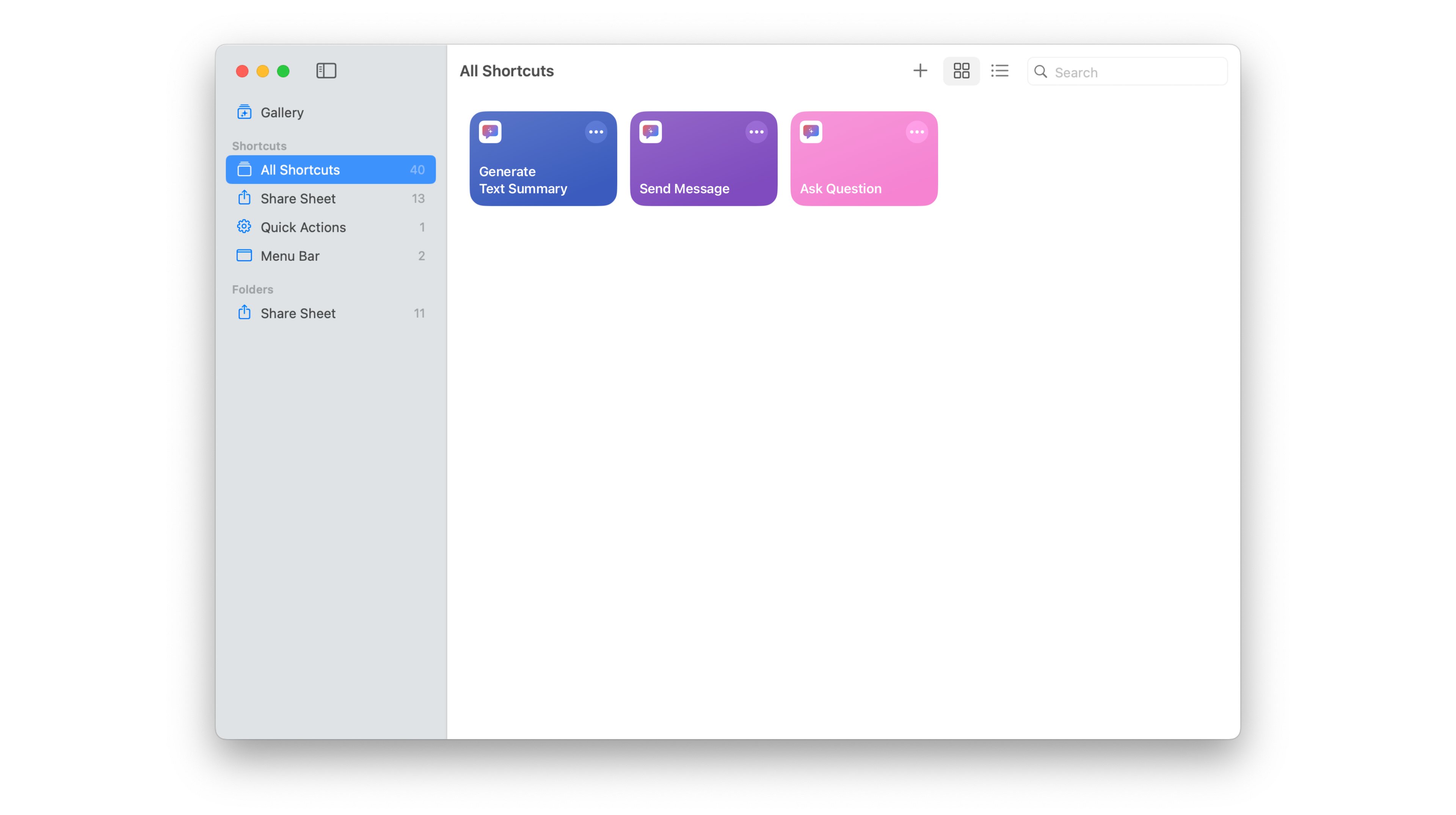

- Siri Shortcuts

- Widgets

- 100% private and offline

- No ads or tracking

- Dark mode

New in Version 3.0

- Live Voice Chat (two-way voice conversations with LLMs)

- Native app for Apple Vision Pro

- Spanish localization

Description

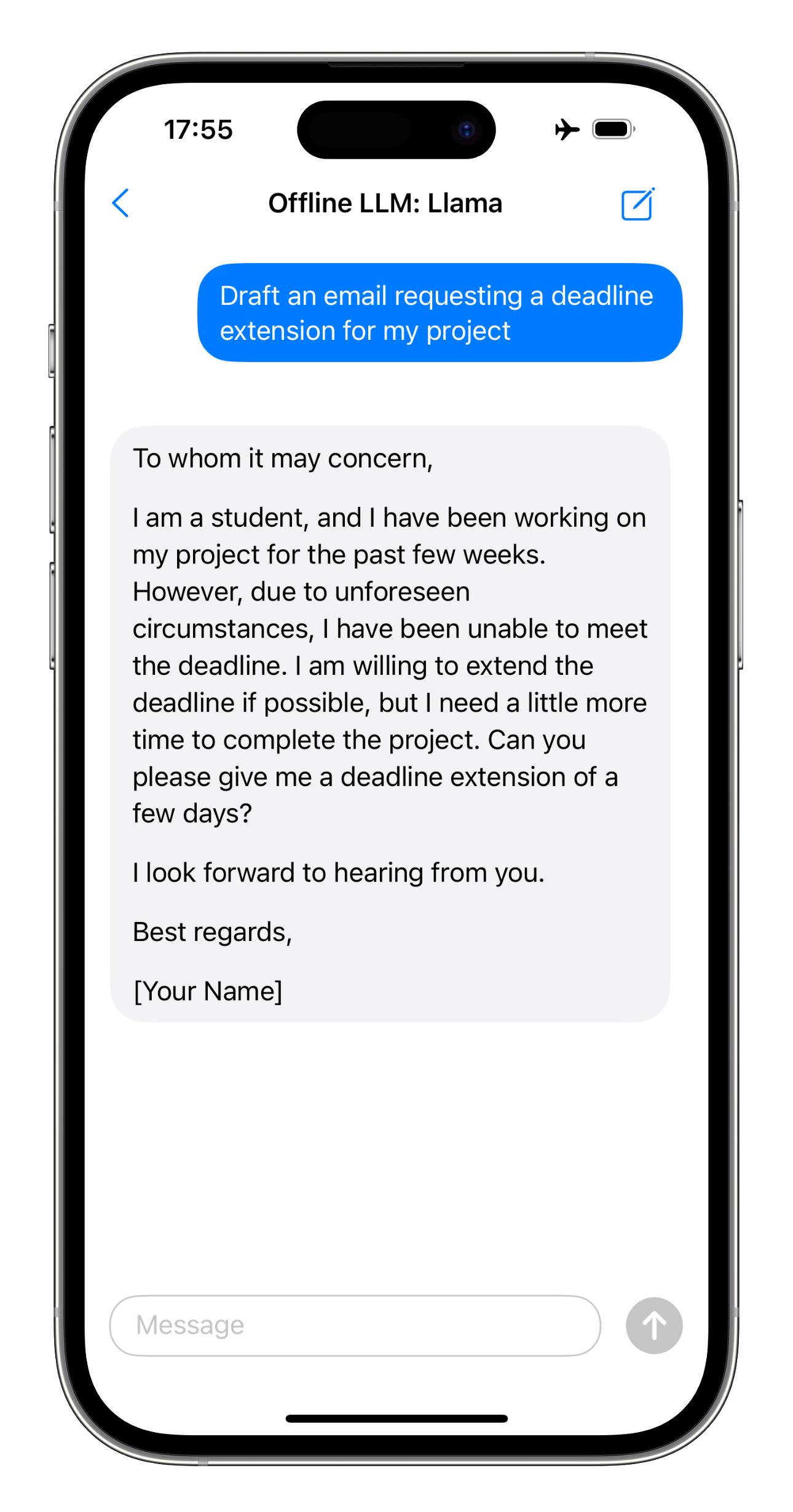

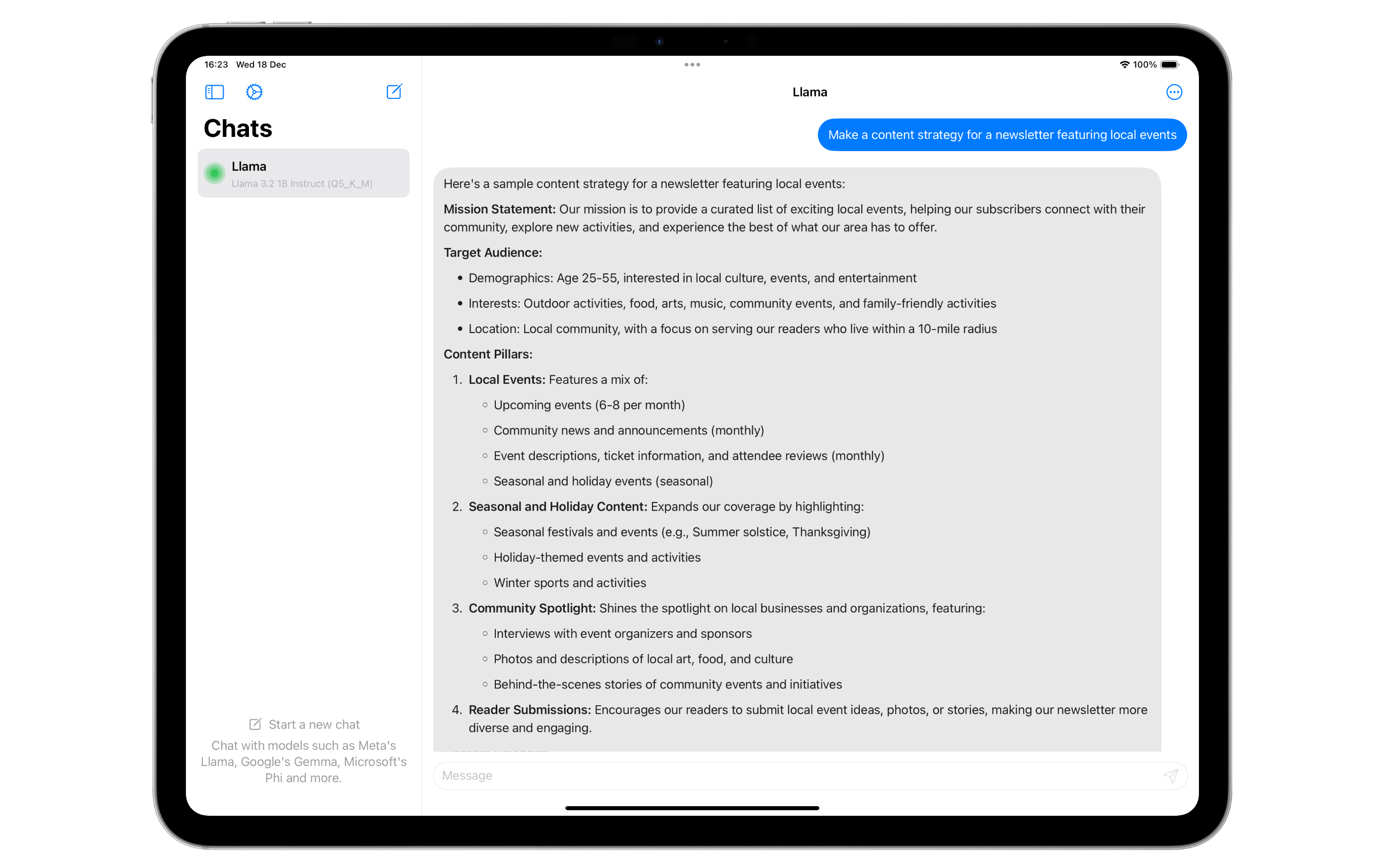

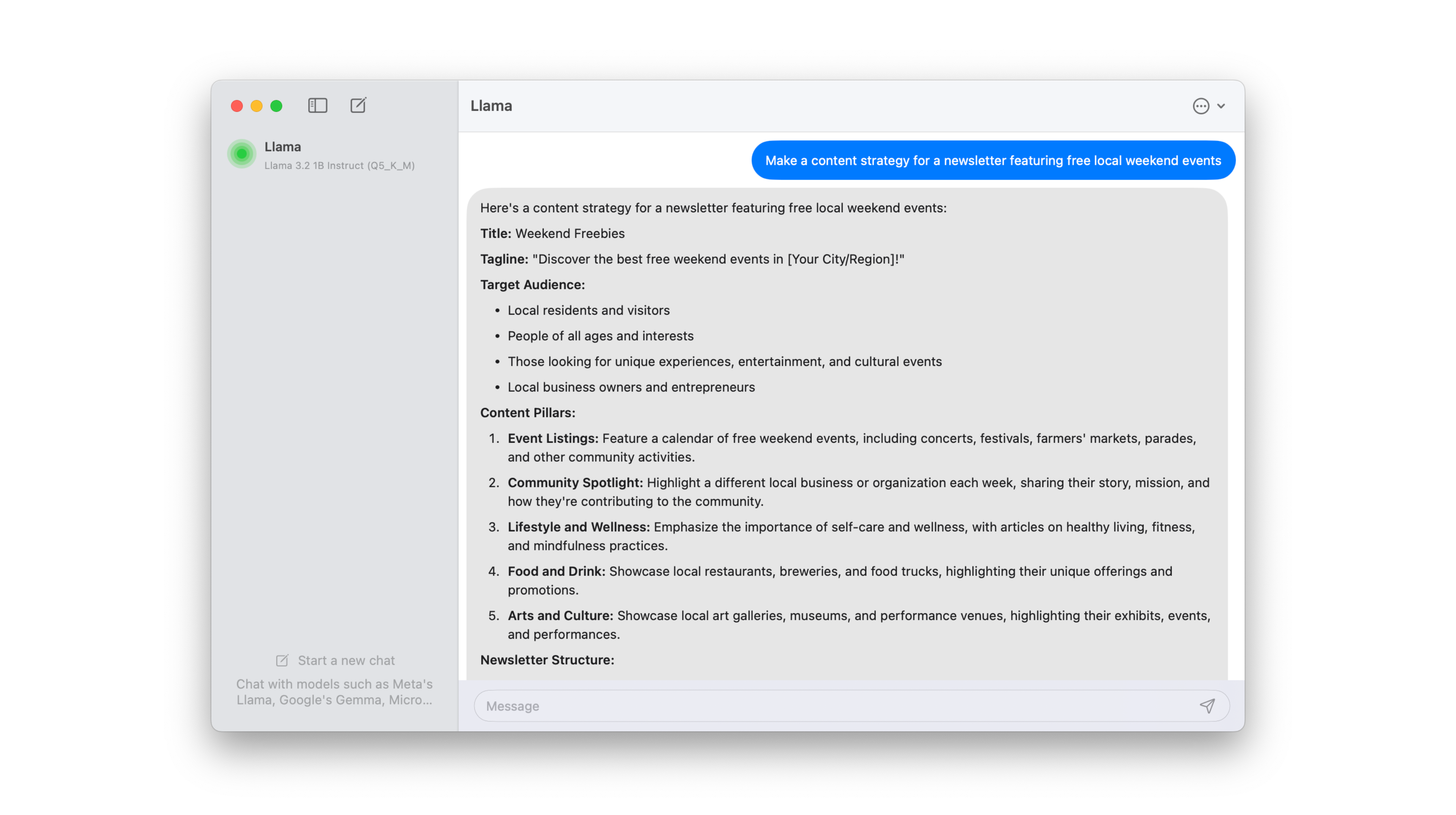

OfflineLLM is the fastest large language model (LLM) engine designed specifically for Apple devices, including iPhone, iPad, Mac and Apple Vision Pro. With OfflineLLM, users can hold private conversations with AI chatbots without an internet connection, so sensitive and confidential data stays on the device.

The app uses a custom execution engine optimized for Apple Silicon and built to take full advantage of Metal 3. This engine lets OfflineLLM outperform existing apps based on llama.cpp and MLC, making it the go-to choice for users who want fast, private AI on their own devices.

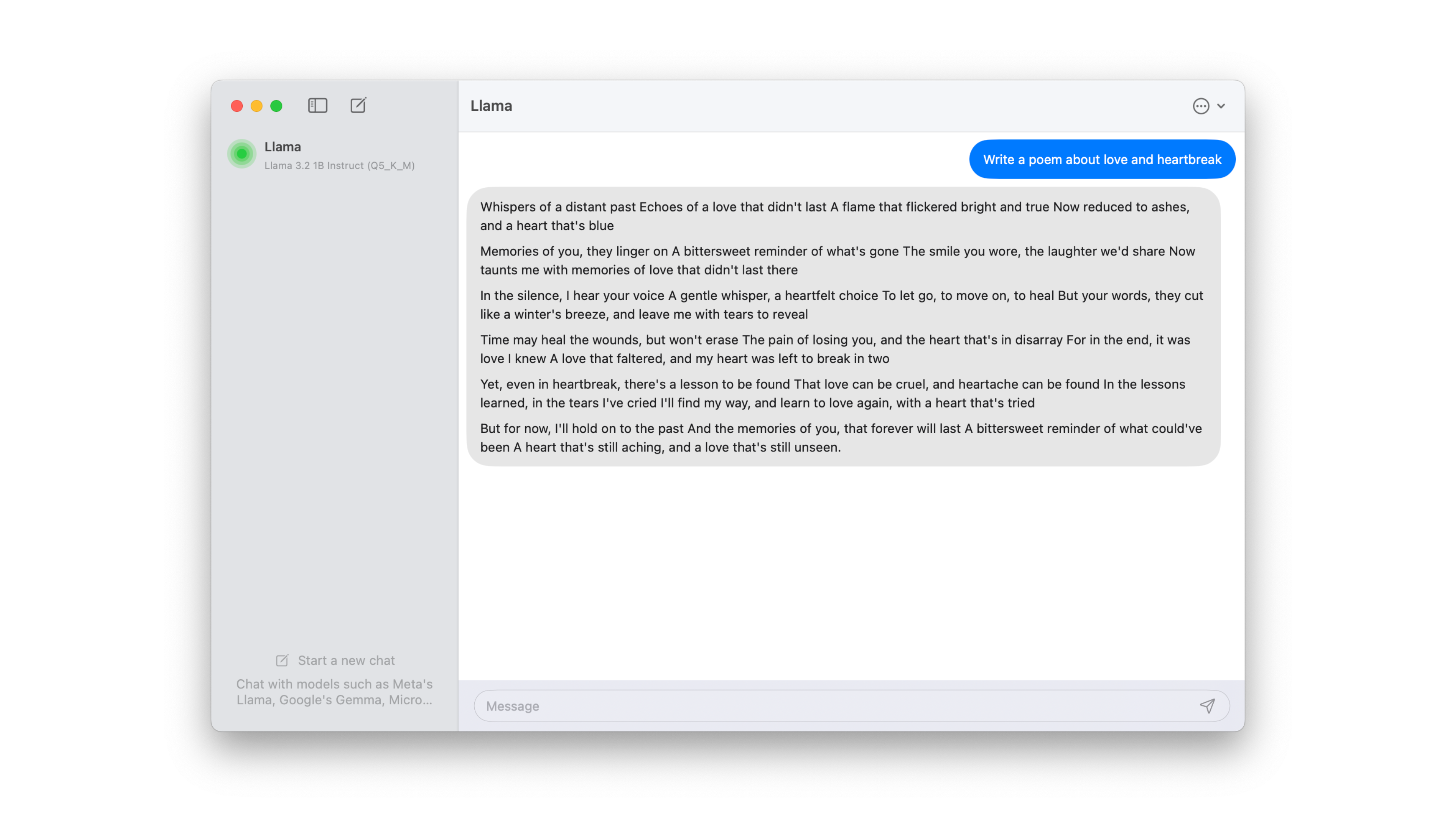

OfflineLLM also supports multimodal vision models, letting users send images to their offline AI chatbots. In addition, the Live Voice Chat feature enables real-time, two-way voice conversations with LLMs, making interactions with AI feel more dynamic and natural.

The app supports Retrieval-Augmented Generation (RAG), which lets users ground the LLM's answers in their own documents and files for a more personalized experience.

OfflineLLM caters to users of all skill levels, with a Beginner mode for newcomers and an Advanced mode for experts who want to adjust every parameter of the LLM execution engine. With support for a wide range of state-of-the-art AI models, including DeepSeek, Llama, Gemma, Phi and many more, OfflineLLM can handle diverse user needs.

Experience the future of AI interaction with OfflineLLM, where privacy meets performance.

About the Developer

Bilaal Rashid is dedicated to developing innovative software solutions that enhance user experiences while prioritizing privacy and security. With a focus on cutting-edge technology, Bilaal aims to empower users with tools that facilitate creativity, learning, and productivity.

Previously developed apps include the open-source project ReadBeeb and ReminderCal. ReminderCal has been featured in The Verge, Lifehacker, 9to5Mac, MacStories and MacRumors, as well as in Apple’s App Store editorials “Do more with interactive widgets” and “See what’s new in iOS 17”.

Screenshots

iPhone

iPad

Mac

Apple Vision Pro